Search engine optimization is not easy, as we all know, but I’m sure most of us dive right into the analysis when performing an SEO audit of our own website or a friend’s.

An SEO audit needs a little planning to ensure that nothing slips through the cracks. First of all, planning lets you identify the problem areas that need improvement and create an action plan to correct them.

Secondly, a good SEO audit keeps your website up to date with the latest developments in search marketing.

What Is an SEO Audit?

Before explaining how to perform an SEO audit of a website, I want to cover the basics: what an SEO audit is and what to expect from it.

An SEO audit is a process for analyzing the health of a website in a number of areas. The auditor checks the site against a checklist and comes up with recommendations for what needs to be fixed or changed. The aim is to improve your website’s search engine ranking.

What to Expect from an SEO Audit?

There are three things you should expect from an SEO audit.

- A description of the current state of the website. This gives you a detailed analysis of how your website is performing in search engines and on social media, the number of external and internal links, and other information related to the state of your site.

- An action list based on the SEO audit checklist, with an explanation for every item on the list.

- A report describing a complete internet marketing plan for taking advantage of all available sources of traffic and opportunities on the internet, not just SEO.

Pre-Audit Checklist

- Google Analytics

- Google Search Console

- Bing Webmaster Tools

Google Analytics

First, make sure you have admin access to the Google Analytics account for your website. Google Analytics is a tool that gives you full details about your visitors: how many there are, where they are located, which devices they use to access your website, and which pages are most popular.

You can use this data to keep track of how your landing pages perform and apply what you learn to your website. It is entirely free.

Google Search Console

Google Analytics is best for monitoring visitor behavior, but it won’t tell you much about how your website interacts with search engine spiders. For that, you need Google Search Console.

With it, you can manage which content is crawled and indexed by the search engine, discover inbound links, see the keywords that led to your site being found, assess mobile friendliness, and more.

Bing Webmaster Tools

Google is not the only search engine with excellent tools. Bing Webmaster Tools can also help with your SEO audit because it works in a similar way to Google’s tools. By using it, you can get detailed reports on how to better optimize your website, identify your competitors’ backlinks, research keywords, and more.

Not all of these tools are vital, but they make the process easier.

Let’s get started!

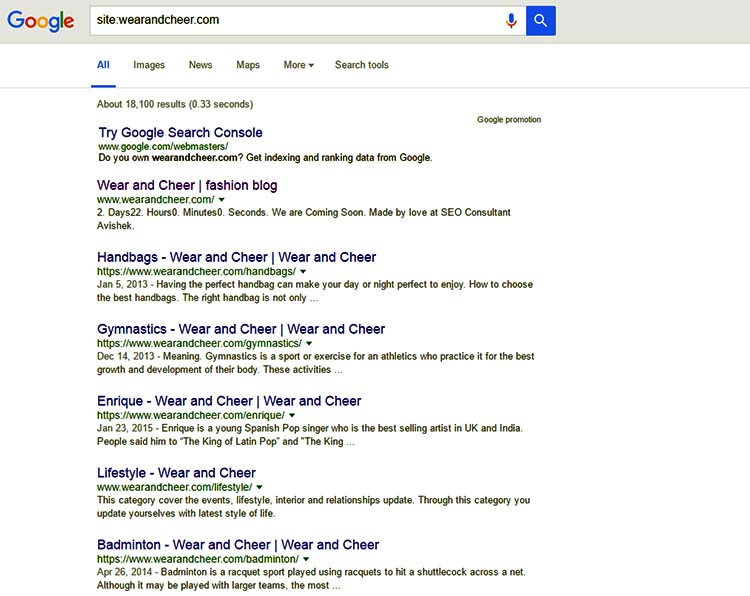

1# Site Crawl

Start by checking the site’s index in Google so you can compare what is being indexed with the output of your crawl. Go to Google.com, type “site:domainname.com” in the search bar, and see what Google has indexed. For example, I used my own website, wearandcheer.com, and you can see that wearandcheer.com has 18,100 pages in Google’s index.
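If you check several domains, the search can be turned into a link you open in the browser. This is only a small sketch: the function name is my own, and it simply builds a Google search URL for the site: query rather than fetching or parsing any results.

```python
from urllib.parse import urlencode


def site_query_url(domain: str) -> str:
    """Build a Google search URL for the query `site:<domain>`."""
    # urlencode percent-encodes the colon, so the query survives as one parameter.
    return "https://www.google.com/search?" + urlencode({"q": f"site:{domain}"})


if __name__ == "__main__":
    print(site_query_url("domainname.com"))
```

Open the printed link in a browser and read the result count at the top of the page.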

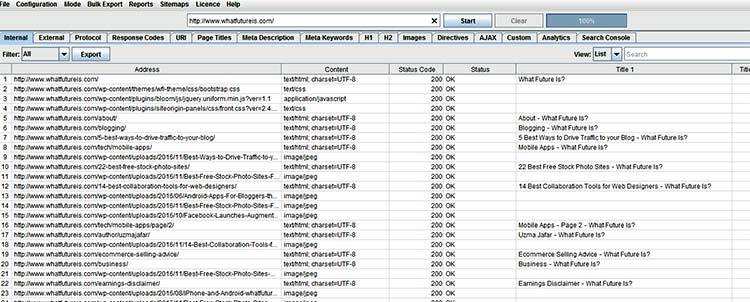

For the actual crawl of the site, you need a different kind of tool. You can use Screaming Frog (free for up to 500 URLs, otherwise $99/year) or Beam Us Up (free).

Beam Us Up is very easy to use. Just add your URL and click the start button, and the crawler runs in the background.

In Screaming Frog, enter the URL of the website you want to crawl. When the crawl is 100% complete, you can export the results to a CSV file or spreadsheet. With this, you’ll be able to analyze the current state of your website and gain insight into page errors, duplicate metadata, on-site factors, site files, links, and more.
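Once you have a CSV export, a few lines of Python can surface duplicate metadata. The sketch below assumes a made-up export with the columns url, status, and title; real crawler exports use their own column names, so adjust the keys to match your file.

```python
import csv
import io
from collections import defaultdict


def duplicate_titles(csv_text: str) -> dict:
    """Group URLs by page title and keep only titles used more than once."""
    by_title = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Compare titles case-insensitively and ignore stray whitespace.
        by_title[row["title"].strip().lower()].append(row["url"])
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical export for illustration only.
SAMPLE = """url,status,title
http://www.domainname.com/,200,Home
http://www.domainname.com/index.html,200,Home
http://www.domainname.com/about,200,About Us
http://www.domainname.com/old,404,Not Found
"""

if __name__ == "__main__":
    for title, urls in duplicate_titles(SAMPLE).items():
        print(title, "->", urls)
```

The same grouping works for meta descriptions if your export includes them.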

This report will serve as the blueprint for your website’s SEO audit.

2# Only One Version of the Website Is Browseable

Suppose people can reach your website through all of these addresses:

- http://domainname.com

- http://www.domainname.com

- https://domainname.com

- https://www.domainname.com

Only one of them should be accessible in the browser; the others should be 301 redirected to the official version.
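To keep track of which addresses need a redirect, you can list the variants and map each one to the official version. This is a minimal sketch with function names of my own choosing; it only builds the plan and does not touch your server.

```python
def site_variants(domain: str) -> list:
    """Return the four scheme/host combinations for a bare domain."""
    return [
        f"{scheme}://{host}"
        for scheme in ("http", "https")
        for host in (domain, f"www.{domain}")
    ]


def redirect_plan(domain: str, official: str) -> dict:
    """Map every non-official variant to the official one (the 301 target)."""
    return {v: official for v in site_variants(domain) if v != official}


if __name__ == "__main__":
    plan = redirect_plan("domainname.com", "https://www.domainname.com")
    for source, target in plan.items():
        print(f"{source} -> 301 -> {target}")
```

Each source address in the plan can then be opened in a browser or a header checker to confirm that it really answers with a 301 to the official version.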

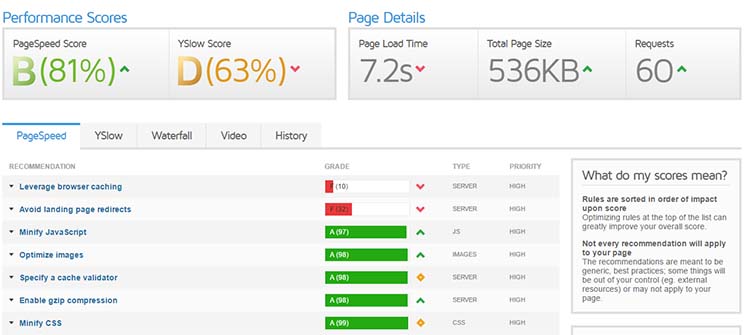

3# Website Speed

As we know, website speed is an important factor, not just for the search engine results pages (SERPs) but also for the visitor experience. You can use different tools to check website speed, but Google’s PageSpeed Insights tool lets you check your website’s speed on both mobile and desktop. It also gives you helpful suggestions on how to improve performance.
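PageSpeed Insights also has a web API, which is handy when you want to check many pages. The sketch below only builds the request URL for version 5 of the API with its url and strategy parameters; the function name is my own, and fetching and reading the JSON response is left out. I am assuming light use here; heavier use may need an API key.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights API request URL for one page."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


if __name__ == "__main__":
    for strategy in ("mobile", "desktop"):
        print(psi_request_url("http://www.domainname.com", strategy))
```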

Beyond that, plenty of other speed-checking tools are available. Some of them:

- Pingdom

- KeyCDN Speed Test

- Uptrends

- Yellow Lab Tools

- Google Chrome DevTools

- GTmetrix

- DareBoost

- WebPageTest

GTmetrix is another tool we use to check website speed; it generates a report that shows your website’s speed in full detail.

4# File and URL Names

Sometimes you’ll see page URLs and names that were generated by software and are unreadable. Such URLs and page names are hard to find and are not logically related to the target keywords. For example:

- http://www.domainname.com?number=348urtet/

A URL like this one is not good; a short, readable URL built from the page’s keywords is. Session IDs should also be kept out of website URLs and left in cookies.

One more thing I look at in URLs, filenames, and meta tags is capitalization versus lower case. A good practice is to separate keywords with hyphens rather than underscores and to make sure all URL patterns are consistent.
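These naming rules are easy to check in bulk. Here is a rough sketch that flags uppercase letters, underscores, and session IDs in a URL; the list of session parameter names is my own assumption and should be adapted to whatever your software actually uses.

```python
from urllib.parse import parse_qs, urlsplit

# Assumed parameter names; adjust to match your platform.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}


def url_issues(url: str) -> list:
    """Return a list of naming problems found in one URL."""
    parts = urlsplit(url)
    issues = []
    if parts.path != parts.path.lower():
        issues.append("uppercase letters in path")
    if "_" in parts.path:
        issues.append("underscores instead of hyphens")
    params = {name.lower() for name in parse_qs(parts.query)}
    if params & SESSION_PARAMS:
        issues.append("session ID in query string")
    return issues


if __name__ == "__main__":
    for url in (
        "http://www.domainname.com/red-summer-dresses/",
        "http://www.domainname.com/Red_Dresses?PHPSESSID=abc123",
    ):
        print(url, "->", url_issues(url) or "looks fine")
```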

In some URLs, watch out for # characters. Developers or software commonly use them in a URL to change the content on a page without loading a new page. It is a neat trick, but if that content lives at an address like http://www.domainname.com#privacy rather than at its own URL, Google will ignore it, because Google ignores everything after the # in a URL.

As a result, content behind a # may not get indexed the way you want.
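To see what is left of a URL once the part after the # is dropped, you can strip the fragment with Python’s standard library. This is just an illustration with a helper name of my own; it shows that several # addresses collapse into one URL.

```python
from urllib.parse import urldefrag


def indexable_url(url: str) -> str:
    """Drop the fragment, leaving the URL without anything after the #."""
    return urldefrag(url).url


PAGES = [
    "http://www.domainname.com#privacy",
    "http://www.domainname.com#terms",
    "http://www.domainname.com",
]

if __name__ == "__main__":
    # All three addresses reduce to the same URL.
    print({indexable_url(page) for page in PAGES})
```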

5# Analyse Search Traffic

The aim of an SEO audit is to identify ways to increase website traffic, so it is necessary to look at the website’s current performance. Google Analytics is a good tool for exploring these reports quickly.

First, we will look at the site’s latest search traffic report.

6# Keywords

Most people have some idea of what they’d like to be targeting, but they don’t know which keywords they actually rank for. Google Webmaster Tools is a good place to find the content keywords.

Besides that, Raven Tools, SEMrush.com, and SpyFu.com are great tools for finding content keywords.

You can also find keyword rankings and competition levels with tools like Authority Labs, SEO Book Free Rank, and Moz Rank Checker. Make a list of the short- and long-tail keywords that are being targeted and start building a keyword concept spreadsheet.

The Google AdWords Keyword Planner is a good, free place to gather keyword data for your spreadsheet. It also gives insight into paid search: if a keyword is highly competitive on AdWords, it is likely to be similarly competitive in organic search.

Keyword research tends to be the most time-consuming part of a site audit, but it often yields the most insight. Once you have the data you need from the sources above, put it into a spreadsheet and start organizing, sorting, and highlighting.
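If you prefer to start the spreadsheet from a script, something like the following can label and sort your keyword list before you open it in a spreadsheet program. The three-word cutoff for “long-tail” is my own assumption, not a fixed rule, and the function names are made up for this sketch.

```python
import csv
import io


def keyword_rows(keywords, long_tail_min_words=3):
    """Label each keyword as short-tail or long-tail and sort the result."""
    rows = []
    for keyword in keywords:
        count = len(keyword.split())
        kind = "long-tail" if count >= long_tail_min_words else "short-tail"
        rows.append({"keyword": keyword, "words": count, "type": kind})
    return sorted(rows, key=lambda row: (row["type"], row["keyword"]))


def to_csv(rows) -> str:
    """Serialize the rows as CSV text that a spreadsheet program can open."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["keyword", "words", "type"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    print(to_csv(keyword_rows(["summer dresses", "red summer dresses for women"])))
```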

Once you do, you’ll be astonished by how much you learn about the business and its competitors.

7# Content

Now that you have a proper grasp of the website’s keywords, you can describe what the site is really about. It is time to look at on-page SEO.

Are the keywords in each page’s content?

You can use the Moz On-Page Grader and the Internet Marketing Ninjas optimization tool to check keyword usage in each page’s content.

Is your keyword reflected in the page title? Is the title attractive enough to bring more visitors to the website?

Are the keywords in your meta description and heading tags? Is there any poor grammar or misspelling? (In short, is your content high quality or not?)
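The placement questions above can also be checked with a short script. This is a simplified sketch using Python’s built-in HTML parser; the class and function names are my own, it only looks at h1 to h3 headings, and it does a plain substring match rather than anything smarter.

```python
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Collect the title, meta description, and h1-h3 headings of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.headings = []
        self._current = None  # tag whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._current = tag
        elif tag in ("h1", "h2", "h3"):
            self._current = tag
            self.headings.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current:
            self.headings[-1] += data


def keyword_placement(html: str, keyword: str) -> dict:
    """Report where the keyword appears: title, meta description, headings."""
    parser = OnPageParser()
    parser.feed(html)
    kw = keyword.lower()
    return {
        "title": kw in parser.title.lower(),
        "meta_description": kw in parser.description.lower(),
        "headings": any(kw in heading.lower() for heading in parser.headings),
    }
```

Feed it the HTML of a page and one target keyword, and it returns a True/False flag for each of the three places.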

Finally, make a note of which keywords are more informational and could be used for content development.

8# Duplicate Content

Everyone knows Google hates duplicate or poorly written content. If your site has too much duplicate content, it can be hit hard by Google Panda. So be careful: remove content that is duplicated across multiple pages on your site, because it is bad for the site’s health.

You can check for duplicate content on your site quickly by buying a Copyscape account. You can check 200 URLs for just $10. Things to check:

Are there duplicate versions of the homepage? Has any other duplicate content been identified? What is causing it?

Duplicate content is bad for SEO because the search engine doesn’t know which version to include in or exclude from its index.

Most of the time, search engines give credit and authority only to the strongest, most authoritative version. Your content could end up being treated as the copy.

Some common culprits of duplicate content are:

- Printable pages

- Post IDs and rewrites

- Tracking IDs

- Extraneous URLs
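Several of these culprits are really the same page under different URLs, so one way to spot them is to normalize the URLs from your crawl and group the ones that collapse together. The sketch below is only an approximation: the list of tracking and print parameters is my own assumption, and it compares URLs, not page text.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed parameter names; extend with whatever your site appends.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "ref", "print"}


def normalize(url: str) -> str:
    """Strip tracking/print parameters, trailing slashes, and fragments."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in TRACKING_PARAMS
    ]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), path, urlencode(sorted(query)), "")
    )


def duplicate_groups(urls) -> dict:
    """Group URLs that normalize to the same address."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize(url), []).append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Any group with more than one member is a candidate for a 301 redirect or a canonical tag.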

You can also find out whether you could use rel=prev/next.

This is an HTML link element that indicates the relationship between component URLs in a paginated series. Search engines like this practice. The first page in the series gets priority in the SERP.

9# Rel=Canonical

Rel=canonical is an important tag and very helpful for data-driven sites. It tells the search engine where the original content is, so that it can crawl it and decide which version to index. It is easy to add to a site’s HTTP headers through .htaccess or PHP.

Note that it is only a hint to the search engine; it does not affect how the page is displayed or cause any redirection at the server level.
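For reference, here is roughly what the canonical hint looks like in its two usual forms, as an HTTP Link header and as a link element in the page head. The helper names are hypothetical; the sketch only produces the text, and wiring it into .htaccess, PHP, or your templates is up to your setup.

```python
from html import escape


def canonical_link_header(canonical_url: str) -> tuple:
    """Return the (name, value) pair for a rel="canonical" HTTP header."""
    return ("Link", f'<{canonical_url}>; rel="canonical"')


def canonical_link_tag(canonical_url: str) -> str:
    """Return the equivalent <link> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url, quote=True)}">'


if __name__ == "__main__":
    name, value = canonical_link_header("http://www.domainname.com/dresses")
    print(f"{name}: {value}")
    print(canonical_link_tag("http://www.domainname.com/dresses"))
```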

10# Links

The Internet is built from a huge number of sites, and clicking on links is the gateway to exploring them. Links are basically divided into internal links (pages on the same domain linking to each other) and offsite links (links from other sites to your website, or links from your website out to other sites).
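To make the distinction concrete, this sketch pulls the links out of a page’s HTML and sorts them by whether they point to the same host. The names are my own, and comparing hosts exactly is a simplification: it treats www.domainname.com and domainname.com as different sites, and non-web links such as mailto: land in the external list.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def split_links(html: str, page_url: str):
    """Return (internal, external) lists of absolute link URLs."""
    collector = LinkCollector()
    collector.feed(html)
    site = urlsplit(page_url).netloc.lower()
    internal, external = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        same_site = urlsplit(absolute).netloc.lower() == site
        (internal if same_site else external).append(absolute)
    return internal, external
```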

Internal Linking

Internal linking is essential for your website because you want people to stay on it longer. If you don’t use a good internal linking strategy, people won’t stay on your website and will bounce off instead.

There are many tools you can use to check your linking structure, which is important to know. Another significant factor is making sure there are no broken links. If your site runs on WordPress, you can use a plugin that automatically alerts you when a broken link is found.
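If you are not on WordPress, a small script can do a similar check. This is a minimal sketch with function names of my own: it sends a HEAD request to each URL and reports the ones that fail or return a 4xx/5xx status. Note that urllib follows redirects, so a redirected link is reported by its final status, and some servers do not answer HEAD requests properly.

```python
import urllib.error
import urllib.request


def http_status(url: str, timeout: float = 10):
    """Return the HTTP status for a HEAD request, or None if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses arrive as exceptions
    except (urllib.error.URLError, OSError):
        return None


def broken_links(urls, get_status=http_status):
    """Return (url, status) pairs for links that fail or return 4xx/5xx."""
    results = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            results.append((url, status))
    return results
```

The get_status argument exists so the check can be tried with a stand-in function before pointing it at a live site.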

External Links

External links, or backlinks, sometimes referred to as inbound links, are links from other websites to yours. These links are important for SEO and count as popularity votes for your website with Google, Bing, and other search engines.

Many signals are involved in helping your website rank, but backlinks still come into play. There are several things to look into with backlinks. A complete backlink crawl will help you find out who links to you and which pages link to you.

There are many tools; my favorites are:

- MajesticSEO

- Moz Open Site Explorer

- Google Webmaster Tools

After the crawl is complete, it is time to look at the quality of the backlinks. Why were these links added? Does your site have lots of backlinks? How many are followed and how many are nofollow? How many are 301 redirects?

You can look at all of this information in Google Webmaster Tools, and disavow links there if needed. Once your backlink list is complete, you’ll have a wealth of information to help you determine the reasons behind any problems, attacks, and ranking changes.

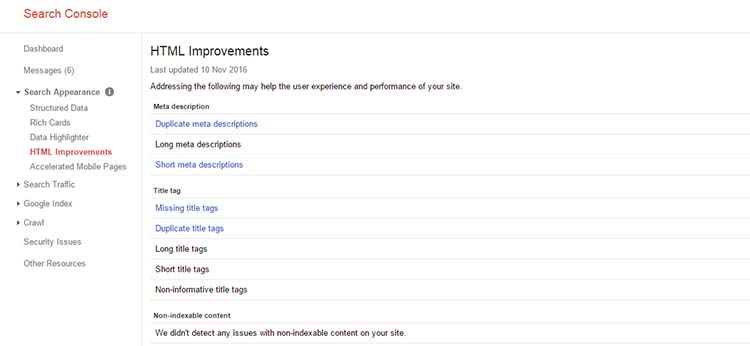

11# Gather Data from Google Search Console

At the start of the article I covered the basics of Google Search Console; now I’ll show you how to get data from it.

Google Search Console is an excellent webmaster tool that gives us helpful data for an SEO audit.

Look for Crawl Errors

First of all, check whether Google is reporting any issues from crawling the site.

Crawl > Crawl Errors

There you can see whether Google is reporting any Not Found (404) or Access Denied (403) errors.

404 Not Found errors are typically caused by old services, tags, or products that have been deleted from the site. In this situation, I recommend 301 redirecting the removed tags or products to a similar product or to the parent category.

This way, any links keep the equity the pages may have accumulated, which would otherwise be lost to the 404 errors.
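When the list of 404s is long, a script can draft the redirects for you. This sketch assumes that the parent category is simply the URL one folder up, which may not match your site structure, and it prints lines in Apache’s Redirect 301 format for an .htaccess file. The function names are hypothetical, and every generated rule should be reviewed by hand.

```python
import posixpath
from urllib.parse import urlsplit, urlunsplit


def parent_category(url: str) -> str:
    """Return the URL one folder up from the given page."""
    parts = urlsplit(url)
    parent = posixpath.dirname(parts.path.rstrip("/")) or "/"
    if not parent.endswith("/"):
        parent += "/"
    return urlunsplit((parts.scheme, parts.netloc, parent, "", ""))


def redirect_rules(not_found_urls) -> list:
    """Pair each missing path with its parent category URL."""
    return [(urlsplit(url).path, parent_category(url)) for url in not_found_urls]


def htaccess_lines(rules) -> list:
    """Format the rules as Apache mod_alias Redirect directives."""
    return [f"Redirect 301 {path} {target}" for path, target in rules]


if __name__ == "__main__":
    missing = ["http://www.domainname.com/dresses/red-maxi-dress/"]
    print("\n".join(htaccess_lines(redirect_rules(missing))))
```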

The 403 errors require more in-depth research to find out how Google is discovering those URLs.

Check for HTML Improvements

Search Appearance > HTML Improvements

Here you can look at the HTML Improvements report to review any on-page issues that Google found when indexing the site.

In this report, Google flags issues such as duplicate meta descriptions, long meta descriptions, and missing title tags. We have already covered most of these issues above, so I hope you’ll understand how to fix them.

Wrapping Up

All in all, the information above covers some essential tasks that can improve your website’s SEO in a short time. This is not a complete and final list; there is much more you can do for better SEO, and every piece of information leads to another discovery.

Now it is your turn. What are your first and biggest challenges when you decide to audit your website? What solutions have worked for you?

I would love to read what you are doing differently when auditing your website. Write your story in the comments below and help everyone who wants to improve their own website SEO audit.

Let’s start the discussion.